Dataset overview

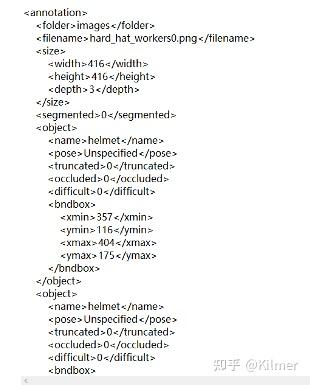

The dataset contains 40,000 training images and 1,000 test images. Each training image has a corresponding XML annotation file.

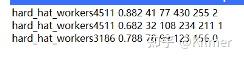

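There are 3 classes: 0: 'head', 1: 'helmet', 2: 'person'. Submission format: submit a file named pred_result.txt. Each line represents one detected object and contains these fields in order: image name, confidence, xmin, ymin, xmax, ymax, class.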
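The sketch below produces and checks one line of pred_result.txt. It assumes fields are separated by single spaces and that the image name has no extension, which matches how the inference code later in this post writes them. `format_line` and `parse_line` are hypothetical helpers, not part of any Paddle API. The image name `img_0001` and the box values are made up.

```python
# Hypothetical helpers for the pred_result.txt format:
# "<image name> <confidence> <xmin> <ymin> <xmax> <ymax> <class id>"
CLASSES = {0: "head", 1: "helmet", 2: "person"}


def format_line(image_name: str, score: float, box: tuple, class_id: int) -> str:
    """Build one submission line from a corner-format box (xmin, ymin, xmax, ymax)."""
    xmin, ymin, xmax, ymax = box
    if class_id not in CLASSES:
        raise ValueError(f"unknown class id {class_id}")
    if not (xmin <= xmax and ymin <= ymax):
        raise ValueError(f"box is not in (xmin, ymin, xmax, ymax) order: {box}")
    return f"{image_name} {score} {xmin} {ymin} {xmax} {ymax} {class_id}"


def parse_line(line: str) -> dict:
    """Split a submission line back into typed fields; raises ValueError if malformed."""
    fields = line.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    name, score, xmin, ymin, xmax, ymax, class_id = fields
    cid = int(class_id)
    if cid not in CLASSES:
        raise ValueError(f"unknown class id {cid}")
    return {
        "image": name,
        "score": float(score),
        "box": (int(xmin), int(ymin), int(xmax), int(ymax)),
        "class_id": cid,
        "class": CLASSES[cid],
    }


line = format_line("img_0001", 0.93, (12, 30, 58, 80), 1)
print(line)                       # img_0001 0.93 12 30 58 80 1
print(parse_line(line)["class"])  # helmet
```

Checking a few lines this way before submitting catches swapped coordinates or a wrong field count early.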

Constraint: only the Paddle framework and the AI Studio platform may be used to run the code.

Overall approach:

Use the PaddleX framework with the PP-YOLOv2 model.

# The code below uses the PaddleX 2.x API (paddlex.transforms, pdx.datasets, pdx.det).
# PaddleX 3.x has a different API, so pin a 2.x release.
!pip install "paddlex<3"

import paddlex as pdx
from paddlex import transforms as T

# Data augmentation
train_transforms = T.Compose([
    T.MixupImage(mixup_epoch=-1),
    T.RandomDistort(),
    T.RandomExpand(im_padding_value=[123.675, 116.28, 103.53]),
    T.RandomCrop(),
    T.RandomHorizontalFlip(),
    T.BatchRandomResize(
        target_sizes=[192, 224, 256, 288, 320, 352, 384, 416, 448, 480, 512],
        interp='RANDOM'),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

eval_transforms = T.Compose([
    T.Resize(target_size=320, interp='CUBIC'),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])
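The `im_padding_value` passed to `RandomExpand` equals the `Normalize` mean scaled to 0-255 (0.485 x 255 = 123.675, and so on). The padded border therefore becomes approximately zero after normalization. The sketch below checks that arithmetic. It assumes `Normalize` computes (pixel / 255 - mean) / std per channel, the usual convention for these ImageNet statistics. `normalize_pixel` is a hypothetical helper, not the PaddleX implementation.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]
PAD = [123.675, 116.28, 103.53]  # im_padding_value used in RandomExpand above


def normalize_pixel(rgb):
    """Scale an RGB pixel from 0-255 to 0-1, then standardize each channel with MEAN and STD."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD)]


# The padding value is the channel mean expressed in 0-255 pixel units ...
print([round(m * 255, 3) for m in MEAN])  # [123.675, 116.28, 103.53]
# ... so padded pixels normalize to (numerically) zero
print(all(abs(x) < 1e-9 for x in normalize_pixel(PAD)))  # True
```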

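import os

IMAGE_EXTS = (".jpg", ".jpeg", ".png", ".bmp")

# Training file list: one "<image path>\t<annotation path>" line per image, relative to work/helmet/train/.
# Only image files are listed. Without this check, a non-image entry in the folder produces
# a malformed line that has to be deleted by hand.
with open("work/total.txt", "w", encoding="utf-8") as f:
    for name in sorted(os.listdir("work/helmet/train/images/")):
        stem, ext = os.path.splitext(name)
        if ext.lower() in IMAGE_EXTS:
            f.write("images/" + name + "\t" + "annotations/" + stem + ".xml" + "\n")

# Test file list, built the same way
with open("work/test.txt", "w", encoding="utf-8") as f:
    for name in sorted(os.listdir("work/helmet/test/images/")):
        stem, ext = os.path.splitext(name)
        if ext.lower() in IMAGE_EXTS:
            f.write("images/" + name + "\t" + "annotations/" + stem + ".xml" + "\n")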
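Before building the datasets, it can help to confirm that every line of a file list points at files that actually exist. The sketch below assumes the tab-separated "image, annotation" format written above, with paths relative to `data_dir`. `check_file_list` is a hypothetical helper. Per the dataset description, only the training images come with XML annotations, so this check is meant for total.txt, train.txt, and val.txt.

```python
import os
import tempfile


def check_file_list(data_dir: str, file_list: str) -> list:
    """Return (line number, entry, problem) tuples for lines that are malformed or point at missing files."""
    problems = []
    with open(file_list, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            parts = line.rstrip("\n").split("\t")
            if len(parts) != 2:
                problems.append((lineno, line.rstrip("\n"), "expected 2 tab-separated fields"))
                continue
            for rel in parts:
                if not os.path.isfile(os.path.join(data_dir, rel)):
                    problems.append((lineno, rel, "missing file"))
    return problems


# Self-contained demo in a temporary directory: line 1 is valid, line 2 points at missing files
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "images"))
    os.makedirs(os.path.join(root, "annotations"))
    open(os.path.join(root, "images", "a.png"), "w").close()
    open(os.path.join(root, "annotations", "a.xml"), "w").close()
    list_path = os.path.join(root, "list.txt")
    with open(list_path, "w", encoding="utf-8") as f:
        f.write("images/a.png\tannotations/a.xml\n")
        f.write("images/b.png\tannotations/b.xml\n")
    problems = check_file_list(root, list_path)

print(problems)  # [(2, 'images/b.png', 'missing file'), (2, 'annotations/b.xml', 'missing file')]
```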

from sklearn.utils import shuffle

# Shuffle with a fixed seed and hold out 10% of the training images for validation
with open("work/total.txt", "r", encoding="utf-8") as f:
    total = f.readlines()

ratio = 0.9
total = shuffle(total, random_state=100)
train_len = int(len(total) * ratio)
train = total[:train_len]
val = total[train_len:]

with open("work/train.txt", "w", encoding="utf-8") as f1:
    f1.writelines(train)

with open("work/val.txt", "w", encoding="utf-8") as f2:
    f2.writelines(val)
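The split above keeps int(len(total) x 0.9) lines for training. With the 40,000 training images described above, that gives 36,000 for training and 4,000 for validation. If you prefer not to depend on scikit-learn, a seeded standard-library shuffle does the same job. It will not reproduce the exact split that `sklearn.utils.shuffle(..., random_state=100)` produces. `split_lines` is a hypothetical helper.

```python
import random


def split_lines(lines, ratio=0.9, seed=100):
    """Shuffle a copy of `lines` with a fixed seed and split it into (train, val)."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)
    train_len = int(len(shuffled) * ratio)
    return shuffled[:train_len], shuffled[train_len:]


# Placeholder file-list lines standing in for the 40,000 real entries
lines = [f"images/{i}.png\tannotations/{i}.xml\n" for i in range(40000)]
train, val = split_lines(lines)
print(len(train), len(val))  # 36000 4000
```

Because the seed is fixed, rerunning the notebook yields the same split, so validation scores from different runs stay comparable.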

# Create work/label.txt by hand: one class name per line, in class-id order (head, helmet, person)
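If you would rather generate label.txt than type it, here is a minimal sketch. VOCDetection reads one class name per line, and the line order determines the class ids. The names must therefore follow the competition's 0/1/2 order for `category_id` in the predictions to match the required class numbers. The names must also match the `<name>` tags in the XML annotations. That those tags are exactly `head`, `helmet`, and `person` is an assumption based on the class list above. The dictionary at the end only mirrors the order-to-id mapping; it is not PaddleX code.

```python
import os

# Class names in competition order: 0 = head, 1 = helmet, 2 = person
LABELS = ["head", "helmet", "person"]

os.makedirs("work", exist_ok=True)
with open("work/label.txt", "w", encoding="utf-8") as f:
    f.write("\n".join(LABELS) + "\n")

# Read it back: line position -> class id
with open("work/label.txt", encoding="utf-8") as f:
    cname2cid = {name.strip(): cid for cid, name in enumerate(f) if name.strip()}
print(cname2cid)  # {'head': 0, 'helmet': 1, 'person': 2}
```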

# Load the datasets
train_dataset = pdx.datasets.VOCDetection(
    data_dir='work/helmet/train/',
    file_list='work/train.txt',
    label_list='work/label.txt',
    transforms=train_transforms,
    shuffle=True)

# test_dataset is not used below: inference reads the test images directly
test_dataset = pdx.datasets.VOCDetection(
    data_dir='work/helmet/test/',
    file_list='work/test.txt',
    label_list='work/label.txt',
    transforms=eval_transforms)

# The validation split is held out from the training folder
eval_dataset = pdx.datasets.VOCDetection(
    data_dir='work/helmet/train/',
    file_list='work/val.txt',
    label_list='work/label.txt',
    transforms=eval_transforms)

# Cluster the training-set boxes into 9 anchors
anchors = train_dataset.cluster_yolo_anchor(num_anchors=9, image_size=608)
anchor_masks = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]
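`anchor_masks` selects which of the 9 clustered anchors each YOLO output level uses. In YOLOv3-style heads, the first output is the coarsest feature map (stride 32). So `[6, 7, 8]` gives that level the three largest anchors, assuming the anchor list is sorted from smallest to largest area. The sketch below only illustrates the indexing. The anchor sizes are made-up placeholders, not the values `cluster_yolo_anchor` returns for this dataset.

```python
# Placeholder anchors (w, h) in pixels, sorted by area; NOT the clustered values for this dataset
anchors = [[8, 9], [12, 14], [17, 19], [23, 27], [31, 36], [42, 48], [58, 66], [81, 93], [120, 140]]
anchor_masks = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]
strides = [32, 16, 8]  # assumed YOLOv3-style output order: coarsest feature map first

assert anchors == sorted(anchors, key=lambda a: a[0] * a[1]), "anchors must be sorted by area"

# Map each output stride to the anchors its mask selects
per_level = {stride: [anchors[i] for i in mask] for stride, mask in zip(strides, anchor_masks)}
for stride, level_anchors in per_level.items():
    print(f"stride {stride}: {level_anchors}")
# stride 32: [[58, 66], [81, 93], [120, 140]]
# stride 16: [[23, 27], [31, 36], [42, 48]]
# stride 8: [[8, 9], [12, 14], [17, 19]]
```

Large objects (for example, a whole person close to the camera) are then predicted from the coarse map, and small heads and helmets from the fine one.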

# Start training
num_classes = len(train_dataset.labels)
model = pdx.det.PPYOLOv2(
    num_classes=num_classes,
    backbone='ResNet101_vd_dcn',
    anchors=anchors,
    anchor_masks=anchor_masks,
    label_smooth=True)

model.train(
    num_epochs=100,
    train_dataset=train_dataset,
    train_batch_size=8,
    eval_dataset=eval_dataset,
    pretrain_weights='COCO',
    learning_rate=0.005 / 12,
    warmup_steps=500,
    warmup_start_lr=0.0,
    save_interval_epochs=5,
    # lr_decay_epochs=[25, 75],
    save_dir='output1/',
    use_vdl=False,
    early_stop=True,
    early_stop_patience=5)
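Some perspective on `warmup_steps=500`: with 36,000 training images (90% of 40,000) and `train_batch_size=8`, one epoch is 4,500 iterations. The warmup therefore covers about a ninth of the first epoch. The sketch below assumes a linear ramp from `warmup_start_lr` to `learning_rate`, as in `paddle.optimizer.lr.LinearWarmup`, and ignores any later step decay. `lr_at` is a hypothetical helper, not a PaddleX function.

```python
import math

base_lr = 0.005 / 12             # learning_rate above, about 4.17e-4
warmup_steps = 500
warmup_start_lr = 0.0
num_train, batch_size = 36000, 8  # assumed: 90% of 40,000 images, batch size 8

steps_per_epoch = math.ceil(num_train / batch_size)


def lr_at(step: int) -> float:
    """Learning rate at a given iteration under linear warmup (later decay ignored)."""
    if step < warmup_steps:
        return warmup_start_lr + (base_lr - warmup_start_lr) * step / warmup_steps
    return base_lr


print(steps_per_epoch)                           # 4500
print(round(warmup_steps / steps_per_epoch, 3))  # 0.111 (epochs of warmup)
print(f"{lr_at(0):.3e} {lr_at(250):.3e} {lr_at(500):.3e}")  # 0.000e+00 2.083e-04 4.167e-04
```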

# Continue training from the best model of the first run
model.train(
    num_epochs=100,
    train_dataset=train_dataset,
    train_batch_size=8,
    eval_dataset=eval_dataset,
    learning_rate=0.005 / 12,
    warmup_steps=500,
    warmup_start_lr=0.0,
    save_interval_epochs=5,
    # lr_decay_epochs=[25, 75],
    save_dir='output2/',
    pretrain_weights='output1/best_model/model.pdparams',
    use_vdl=False,
    early_stop=True,
    early_stop_patience=5)
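With `early_stop=True` and `early_stop_patience=5`, training stops once the validation metric has failed to improve for 5 consecutive evaluations. Assume evaluation runs every `save_interval_epochs=5` epochs, i.e. whenever a checkpoint is saved. Then training can run up to 25 epochs without improvement before it halts, and `num_epochs=100` is an upper bound rather than the actual run length. The class below is a minimal sketch of that patience logic, not PaddleX's own implementation. The mAP values in the example are invented.

```python
class EarlyStop:
    """Minimal patience-based early stopping for a metric where higher is better."""

    def __init__(self, patience: int = 5):
        self.patience = patience
        self.best = float("-inf")
        self.bad_evals = 0  # consecutive evaluations without improvement

    def should_stop(self, metric: float) -> bool:
        if metric > self.best:
            self.best = metric
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


save_interval_epochs = 5
stopper = EarlyStop(patience=5)
# Invented validation mAPs, one per evaluation (every 5 epochs)
history = [40.1, 48.3, 52.0, 51.7, 51.9, 51.5, 51.8, 51.6]

stop_epoch = None
for n, m in enumerate(history, start=1):
    if stopper.should_stop(m):
        stop_epoch = n * save_interval_epochs
        break
print(stop_epoch, stopper.best)  # 40 52.0
```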

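# Load the best model and evaluate it on the validation split
# (if the second run in output2/ reached a higher validation mAP, load "output2/best_model" instead)
model = pdx.load_model("output1/best_model")
model.evaluate(eval_dataset, batch_size=8, metric=None, return_details=False)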
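The VOC-style mAP used for evaluation counts a predicted box as a true positive when its IoU (intersection over union) with a not-yet-matched ground-truth box of the same class is at least 0.5. This assumes the default VOC metric is what `evaluate` applies to a `VOCDetection` dataset. Below is a minimal IoU sketch for (xmin, ymin, xmax, ymax) boxes using continuous coordinates. Some VOC implementations add 1 to widths and heights for pixel-inclusive boxes; this one does not.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = (10, 10, 50, 50)
print(iou(gt, (10, 10, 50, 50)))  # 1.0
print(iou(gt, (30, 10, 70, 50)))  # 0.3333333333333333 (below 0.5, so a false positive)
print(iou(gt, (60, 60, 90, 90)))  # 0.0
```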

# Inference: the two text files produced here are the final submission candidates
image_dirs = 'work/helmet/test/images/'
# pred_result1.txt: only detections with score >= 0.5; pred_result2.txt: all detections
with open("work/pred_result1.txt", "w", encoding="utf-8") as f1, \
        open("work/pred_result2.txt", "w", encoding="utf-8") as f2:
    for image_name in sorted(os.listdir(image_dirs)):
        stem, ext = os.path.splitext(image_name)
        if ext.lower() not in (".jpg", ".jpeg", ".png", ".bmp"):
            continue
        # predict() returns a list of dicts with 'category_id', 'bbox' ([x, y, w, h]) and 'score'
        result = model.predict(image_dirs + image_name)
        for det in result:
            xmin, ymin = int(det['bbox'][0]), int(det['bbox'][1])
            xmax, ymax = int(xmin + det['bbox'][2]), int(ymin + det['bbox'][3])
            line = " ".join([stem, str(det['score']), str(xmin), str(ymin),
                             str(xmax), str(ymax), str(det['category_id'])]) + "\n"
            if det['score'] >= 0.5:
                f1.write(line)
            f2.write(line)

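The final mAP was 62.77648. Possible next steps are to switch to the PaddleDetection framework and use its ppyoloplus model, or to try the YOLOv5, YOLOv6, YOLOX, or YOLOv7 models in the PaddleYOLO framework. An optimized ppyoloplus model can reach above 65%.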